While testing Alibaba's Tongyi Qianwen model Qwen-1_8B-Chat-Int8 today, I ran into an error. Part of the error output is shown below:

    Traceback (most recent call last):
      File ".\request_llms\local_llm_class.py", line 160, in run
        for response_full in self.llm_stream_generator(**kwargs):
      File ".\request_llms\bridge_qwen_local.py", line 46, in llm_stream_generator
        for response in self._model.chat_stream(self._tokenizer, query, history=history):
      File ".\huggingface\modules\transformers_modules\Qwen\Qwen-1_8B-Chat-Int8\2fb5c225fe592d8d891cbf6c1ed5924cabe48a18\modeling_qwen.py", line 1214, in stream_generator
        for token in self.generate_stream(
      File ".\python3\lib\site-packages\torch\utils\_contextlib.py", line 116, in decorate_context
        return func(*args, **kwargs)
      File ".\python3\lib\site-packages\transformers_stream_generator\main.py", line 208, in generate
        ] = self._prepare_attention_mask_for_generation(
      File ".\python3\lib\site-packages\transformers\generation\utils.py", line 473, in _prepare_attention_mask_for_generation
        torch.isin(elements=inputs, test_elements=pad_token_id).any()
    TypeError: isin() received an invalid combination of arguments - got (elements=Tensor, test_elements=int, ), but expected one of:
     * (Tensor elements, Tensor test_elements, *, bool assume_unique = False, bool invert = False, Tensor out = None)
     * (Number element, Tensor test_elements, *, bool assume_unique = False, bool invert = False, Tensor out = None)
     * (Tensor elements, Number test_element, *, bool assume_unique = False, bool invert = False, Tensor out = None)

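In other words, the arguments passed to torch.isin() do not match any of its accepted signatures. According to the overloads listed in the error, torch.isin accepts two tensors, a number plus a tensor, or a tensor plus a number, but in the scalar forms the argument is named element or test_element (singular). The failing call passes a tensor as elements and a plain integer under the keyword test_elements, a name that only the tensor overloads accept, so no overload matches.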
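The mismatch can be reproduced outside the model. The following is a minimal sketch, assuming PyTorch is installed; the tensor values and the pad_token_id of 0 are made up for illustration and are not taken from Qwen's tokenizer.

```python
import torch

# Illustrative inputs: a small batch of token ids and a pad id given as a plain int.
elements = torch.tensor([[1, 2, 0], [3, 0, 0]])
pad_token_id = 0

# Same call shape as in the traceback: an int passed under the keyword
# test_elements. On the torch build from the traceback this raises TypeError.
try:
    torch.isin(elements=elements, test_elements=pad_token_id)
    raised = False
except TypeError:
    raised = True

# Wrapping the int in a tensor matches the (Tensor elements, Tensor test_elements) overload.
mask = torch.isin(elements=elements, test_elements=torch.tensor(pad_token_id))
has_pad = bool(mask.any())
print(raised, has_pad)
```

Wrapping the integer in a tensor before the call is one way to make the argument types line up; whether that is the right place to patch depends on which library is producing the integer.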

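One fix is to patch the code around the torch.isin call so that the arguments match one of the accepted signatures. The other is to reinstall transformers at a version below 4.41.0, which resolves this error.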
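Before downgrading, it can help to check which transformers version is actually installed. Below is a minimal sketch using only the standard library; the helper names parse_version, is_below and installed_transformers_version are hypothetical, and the comparison deliberately ignores pre-release suffixes such as .dev0 or rc1.

```python
from importlib import metadata


def parse_version(version: str) -> tuple:
    """Turn '4.40.2' into (4, 40, 2); stops at the first non-numeric part."""
    parts = []
    for piece in version.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if digits == "":
            break
        parts.append(int(digits))
    # Pad to three components so '4.41' compares equal to '4.41.0'.
    return tuple((parts + [0, 0, 0])[:3])


def is_below(version: str, limit: str = "4.41.0") -> bool:
    """True if version is lower than limit (4.41.0 is the threshold from this post)."""
    return parse_version(version) < parse_version(limit)


def installed_transformers_version():
    """Return the installed transformers version string, or None if it is not installed."""
    try:
        return metadata.version("transformers")
    except metadata.PackageNotFoundError:
        return None


current = installed_transformers_version()
if current is None:
    print("transformers is not installed")
elif is_below(current):
    print(f"transformers {current} is already below 4.41.0")
else:
    print(f"transformers {current} is 4.41.0 or newer; a downgrade may be needed")
```

If a downgrade is needed, a command such as pip install "transformers<4.41.0" asks pip for a release below that threshold.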

相关推荐

Python报错AssertionError: Torch not compiled with CUDA enabled解决方法

Python报错AssertionError: Torch not compiled with CUDA enabled解决方法 使用Nuitka将Python应用打包为windows的exe程序具体方法

使用Nuitka将Python应用打包为windows的exe程序具体方法 HuggingFace模型压缩打包时符号链接引起的文件夹过大问题解决方法

HuggingFace模型压缩打包时符号链接引起的文件夹过大问题解决方法 AttributeError: module 'torch.library' has no attribute 'register_fake'

AttributeError: module 'torch.library' has no attribute 'register_fake' ImportError: cannot import name 'builder' from 'google.protobuf.internal'

ImportError: cannot import name 'builder' from 'google.protobuf.internal' Gradio应用Radio组件设置默认值后选项不能选择的问题

Gradio应用Radio组件设置默认值后选项不能选择的问题 AttributeError: module 'psutil._psutil_linux' has no attribute 'getpagesize'

AttributeError: module 'psutil._psutil_linux' has no attribute 'getpagesize' safetensors_rust.SafetensorError: Error while deserializing header: HeaderTooLarge

safetensors_rust.SafetensorError: Error while deserializing header: HeaderTooLarge

最近更新

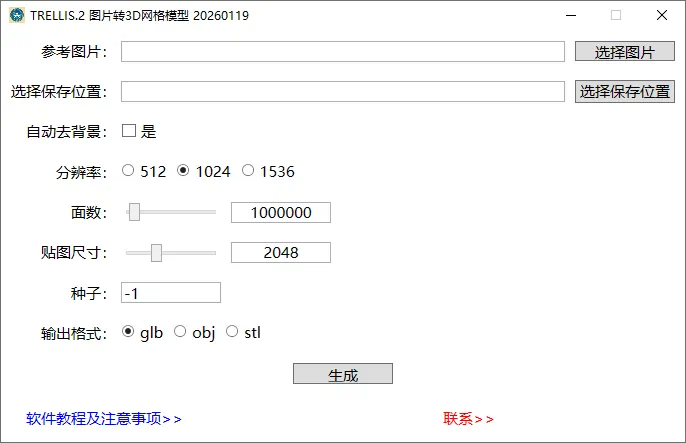

微软最新图片转3D网格模型软件TRELLIS.2 windows版整合包下载,AI一键建模工具

上个月微软发布了图片转3D网格模型软件TRELLIS的2.0版本。之前1.0版本非常受欢迎,当前2.0版本功能更强大,效果更好。我制作了最新windows版免安装一键启动整合包。 TRELLIS.2官方说明 TRELLIS.2 是一款最先进...

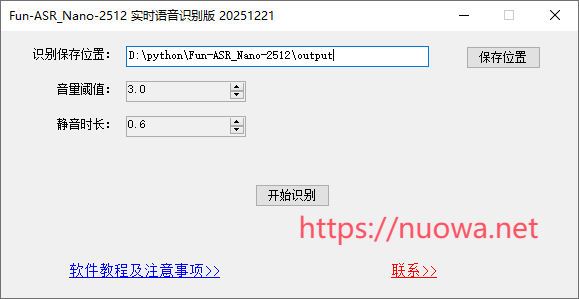

FunASR最新模型FunAudioLLM/Fun-ASR-Nano-2512实时语音识别转文字热词版整合包下载

Fun-ASR-Nano-2512是阿里通义实验室前天刚发布的最新最强的一款语音识别转文字模型,支持31种语言,延迟低,在某些专业领域表现出色。我基于FunAudioLLM/Fun-ASR-Nano-2512模型制作了最新实时语音识别转文字...

Nova数字人虚拟主播软件下载

这个还是2023年做的数字人项目,发现仍有人有这方面需求,我又重新做了一下。把其它所有功能都删除了,只保留了个音频文件驱动口型讲话的功能。 软件功能及用法 启动软件,点击右上角扳手按钮,打开设置界面,先选中一个主播人物,再选择导入一段音频文...

Crawl4AI:基于AI大语言模型的网络爬虫和数据抓取工具整合包软件下载

Crawl4AI是一款基于AI大语言模型能力的网络爬虫和数据抓取软件,可以将网页转换为简洁、符合 LLM 规范的 Markdown 格式,适用于 RAG、代理和数据管道。它速度快、可控性强。 Crawl4AI官方介绍 开源的 LLM 友好型...

browser-use浏览器任务全自动化AI助手windows电脑版一键启动整合包

本次再和大家分享一个非常牛逼的AI助手软件:browser-use,别问哪里牛逼,反正很多人都在用,社区starts高达72.8K,火遍全球的deepseek 100K,browser-use 72.8K,就问你火不火。之前我也分享过其它类...

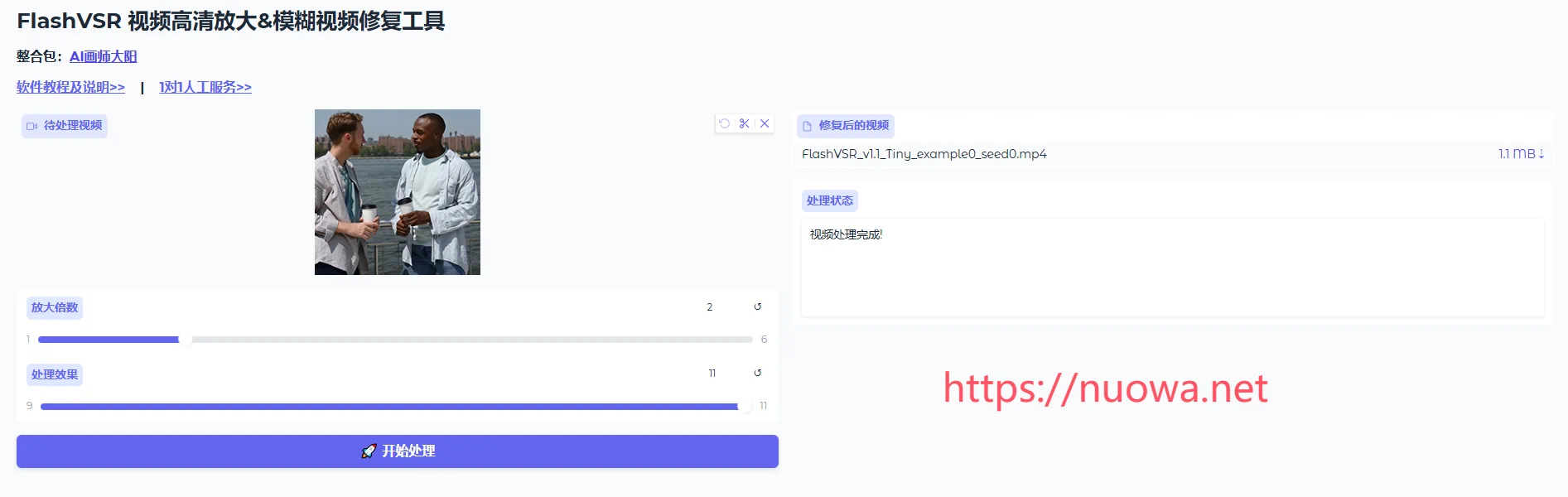

FlashVSR低分辨率模糊视频高清放大工具整合包v1.1下载,免安装一键启动

FlashVSR-一款高性能可靠的视频超高分辨率放大工具。迈向基于扩散的实时流式视频超分辨率——一种高效的单步扩散框架,用于具有局部约束稀疏注意力和小型条件解码器的流式VSR。 FlashVSR官方介绍 扩散模型最近在视频修复方面取得了进展...

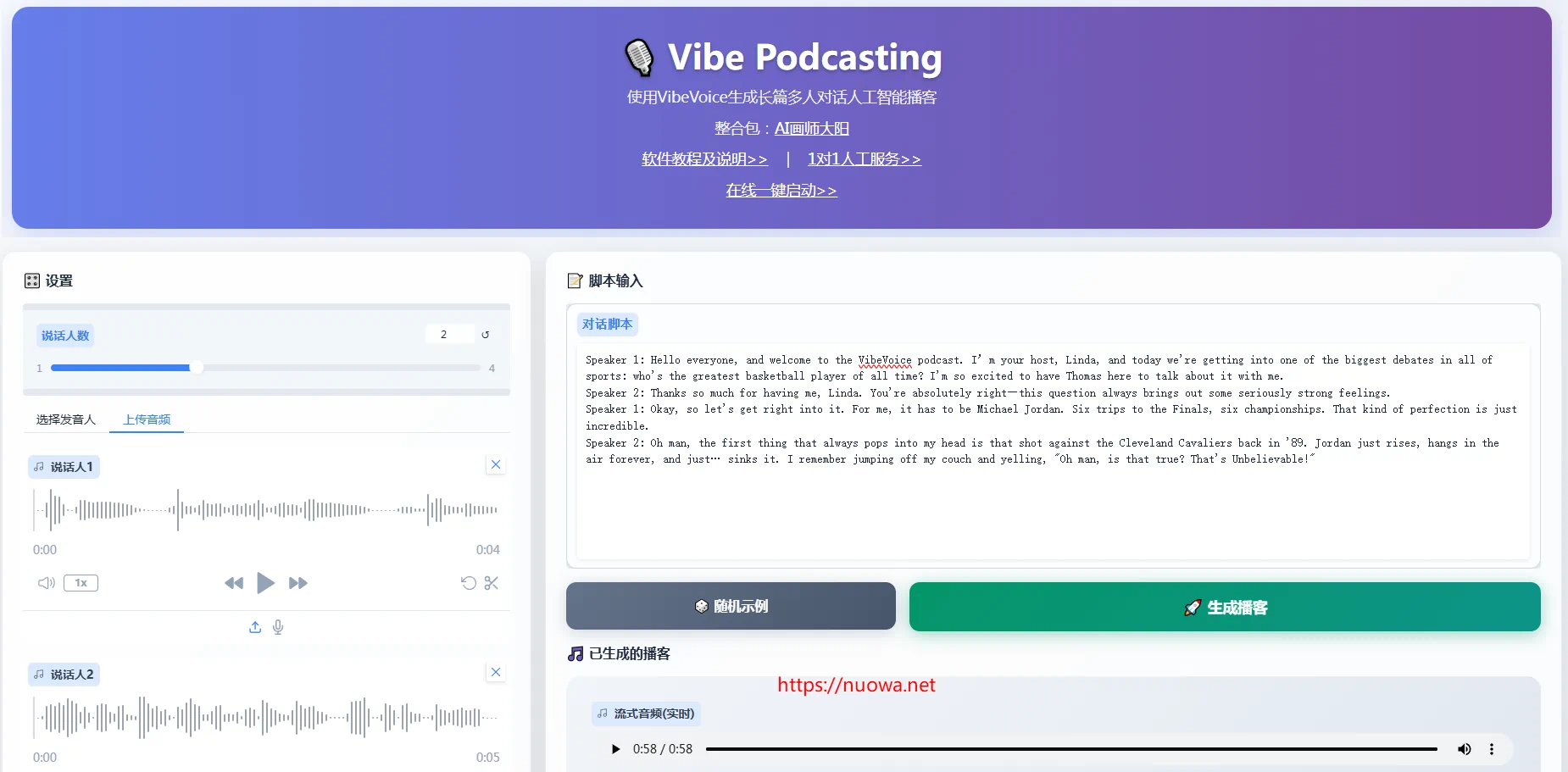

VibeVoice:富有表现力的长篇多人对话语音合成工具整合包下载

VibeVoice是微软开发的一款富有表现力的长篇多人对话语音合成工具。支持1-4个发音人,支持声音克隆自定义音色合成语音,我制作了最新的本地免安装一键启动整合包及云端在线版。 VibeVoice介绍 VibeVoice:一种前沿的长对话文...

多图编辑人物一致性图片合成处理工具Qwen-Image-Edit-2509整合包下载,人物换装换姿势动作软件

Qwen-Image 是一个功能强大的图像生成基础模型,能够进行复杂的文本渲染和精确的图像编辑。Qwen-Image-Edit-2509是Qwen-Image-Edit的月度更新版本,增加了多图编辑和单图一致性生成功能。 Qwen-Imag...

摸鱼神器windows电脑隐藏任务栏软件图标工具rbtray下载

本次和大家分享一个摸鱼神器rbtray。 windows电脑软件运行的时候会在屏幕底部的任务栏上有一个软件图标,如果你开了哪个软件又不想被别人看到你正在运行这个软件的话,那么这个神器rbtray就非常适合你了。 rbtray可以快速将软件图...

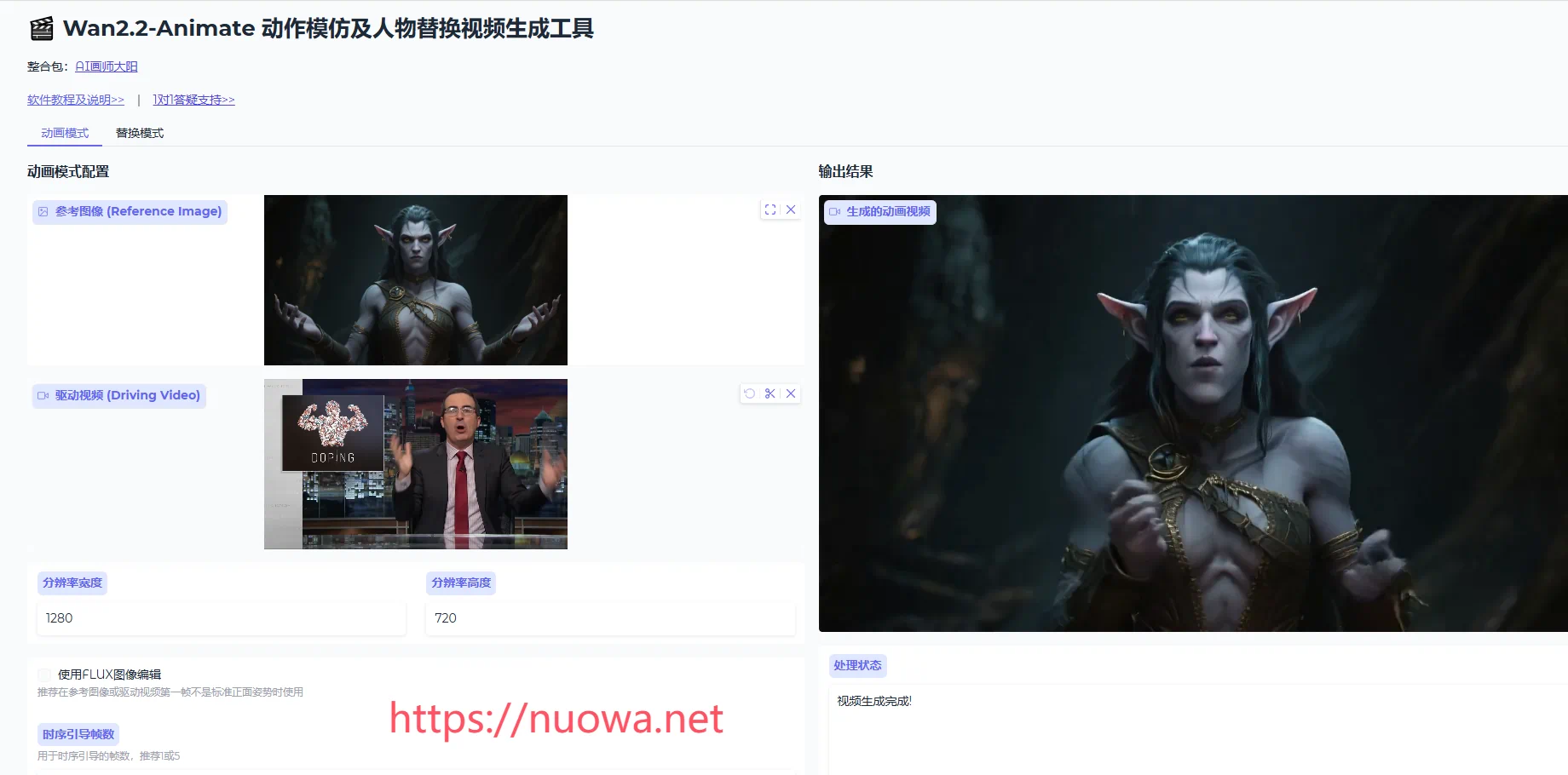

人物动作迁移及视频人物替换软件Wan2.2-Animate-14B整合包下载,动作模仿视频换主体工具在线一键启动

本次和大家分享一个非常强大的动作模仿及视频人物替换工具Wan2.2-Animate-14B,Wan-Animate接受一个视频和一个角色图像作为输入,并生成一个动作模仿或人物替换的视频,视频自然流畅,可玩性非常高。 Wan2.2-Anima...